ai-agents-for-beginners


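Metacognition in AI Agents

Introduction

Welcome to the lesson on metacognition in AI agents! This chapter is designed for beginners who are curious about how AI agents can think about their own thinking processes. By the end of this lesson, you will understand the key concepts and have practical examples for applying metacognition in AI agent design.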

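Learning Goals

After completing this lesson, you will be able to:

- Understand the implications of reasoning loops in agent definitions.
- Use planning and evaluation techniques to help agents self-correct.
- Create your own agents that can manipulate code to accomplish tasks.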

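Introduction to Metacognition

Metacognition refers to the higher-order cognitive processes that involve thinking about one's own thinking. For AI agents, this means being able to evaluate and adjust their actions based on self-awareness and past experiences. Metacognition, or "thinking about thinking," is an important concept in the development of agentic AI systems. It involves AI systems being aware of their own internal processes and being able to monitor, regulate, and adapt their behavior accordingly, much as we do when we read the room or work through a problem. This self-awareness can help AI systems make better decisions, identify errors, and improve their performance over time, which also ties back to the Turing test and the debate over whether AI will take over from humans.

In the context of agentic AI systems, metacognition can help address several challenges:

- Transparency: Ensuring that AI systems can explain their reasoning and decisions.
- Reasoning: Enhancing the ability of AI systems to synthesize information and make sound decisions.
- Adaptation: Allowing AI systems to adjust to new environments and changing conditions.
- Perception: Improving the accuracy with which AI systems recognize and interpret data from their environment.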

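What is Metacognition?

Metacognition, or "thinking about thinking," is a higher-order cognitive process that involves self-awareness and self-regulation of one's own cognitive processes. In AI, metacognition enables agents to evaluate and adapt their strategies and actions, which improves problem-solving and decision-making. By understanding metacognition, you can design AI agents that are not only more intelligent but also more adaptable and efficient. In true metacognition, you would see the AI explicitly reasoning about its own reasoning.

Examples:

- "I prioritized cheaper flights because... I might have missed direct flights, so let me re-check."
- Keeping track of how or why it chose a certain route.
- Noticing that it made a mistake because it over-relied on the user's preferences from last time, so it modifies its decision-making strategy, not just the final recommendation.
- Diagnosing patterns, for example: "Whenever I see the user mention 'too crowded,' I should not only remove certain attractions but also reflect that my method of picking 'top attractions' is flawed if I always rank by popularity."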

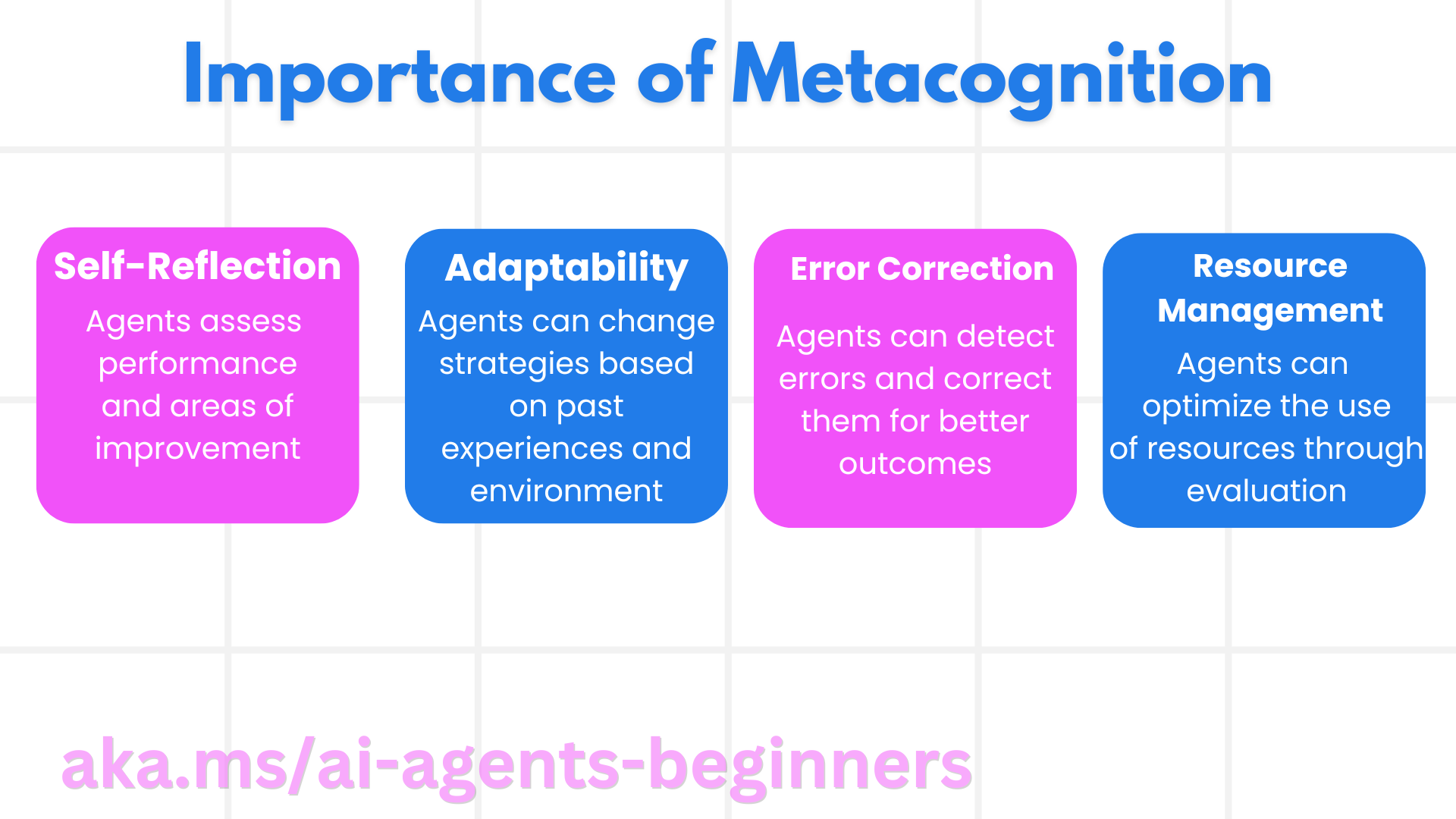

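Importance of Metacognition in AI Agents

Metacognition plays a crucial role in AI agent design for several reasons:

- Self-Reflection: Agents can assess their own performance and identify areas for improvement.
- Adaptability: Agents can modify their strategies based on past experiences and changing environments.
- Error Correction: Agents can detect and correct errors autonomously, leading to more accurate outcomes.
- Resource Management: Agents can optimize the use of resources, such as time and computational power, by planning and evaluating their actions.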

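Components of an AI Agent

Before diving into metacognitive processes, it helps to understand the basic components of an AI agent. An AI agent typically consists of:

- Persona: The personality and characteristics of the agent, which define how it interacts with users.
- Tools: The capabilities and functions that the agent can perform.
- Skills: The knowledge and expertise that the agent possesses.

These components work together to create an "expertise unit" that can perform specific tasks.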
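The three components can be pictured as fields on a single object. The sketch below is only an illustration: `AgentDefinition`, `use_tool`, and `lookup_currency` are hypothetical names, and the currency table is made-up sample data rather than part of the lesson.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AgentDefinition:
    """Hypothetical container for persona, tools, and skills."""
    persona: str                                   # how the agent presents itself
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    skills: List[str] = field(default_factory=list)

    def use_tool(self, name: str, argument: str) -> str:
        # Look up a registered tool by name and run it.
        if name not in self.tools:
            return f"No tool named '{name}' is available."
        return self.tools[name](argument)


def lookup_currency(city: str) -> str:
    # Stand-in tool backed by a tiny hard-coded table (illustrative data only).
    table = {"Paris": "Euro", "Tokyo": "Yen"}
    return table.get(city, "unknown")


agent = AgentDefinition(
    persona="Friendly travel planner",
    tools={"currency": lookup_currency},
    skills=["itinerary planning", "budgeting"],
)
print(agent.use_tool("currency", "Paris"))
```

Keeping tools in a dictionary keyed by name is one simple way to let the agent decide at run time which capability to call.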

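Example: Consider a travel agent service that not only plans your holiday but also adjusts its path based on real-time data and past customer journey experiences.

Example: Metacognition in a Travel Agent Service

Imagine you are designing a travel agent service powered by AI. This agent, "Travel Agent," assists users with planning their vacations. To incorporate metacognition, Travel Agent needs to evaluate and adjust its actions based on self-awareness and past experiences. Here is how metacognition could play a role:

Current Task

The current task is to help a user plan a trip to Paris.

Steps to Complete the Task

- Gather User Preferences: Ask the user about their travel dates, budget, interests (for example, museums, cuisine, shopping), and any specific requirements.
- Retrieve Information: Search for flight options, accommodations, attractions, and restaurants that match the user's preferences.
- Generate Recommendations: Provide a personalized itinerary with flight details, hotel reservations, and suggested activities.
- Adjust Based on Feedback: Ask the user for feedback on the recommendations and make the necessary adjustments.

Required Resources

- Access to flight and hotel booking databases.
- Information on Parisian attractions and restaurants.
- User feedback data from previous interactions.

Experience and Self-Reflection

Travel Agent uses metacognition to evaluate its performance and learn from past experiences. For example:

- Analyzing User Feedback: Travel Agent reviews user feedback to determine which recommendations were well received and which were not. It adjusts its future suggestions accordingly.
- Adaptability: If a user has previously mentioned a dislike for crowded places, Travel Agent will avoid recommending popular tourist spots during peak hours in the future.
- Error Correction: If Travel Agent made an error in a past booking, such as suggesting a hotel that was fully booked, it learns to check availability more rigorously before making recommendations.

Practical Developer Example

Here is a simplified example of how Travel Agent's code might look when incorporating metacognition: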

    # search_flights, search_hotels, search_attractions, create_itinerary and
    # adjust_preferences are placeholder helpers assumed to be defined elsewhere.
    class Travel_Agent:
        def __init__(self):
            self.user_preferences = {}
            self.experience_data = []

        def gather_preferences(self, preferences):
            self.user_preferences = preferences

        def retrieve_information(self):
            # Search for flights, hotels, and attractions based on preferences
            flights = search_flights(self.user_preferences)
            hotels = search_hotels(self.user_preferences)
            attractions = search_attractions(self.user_preferences)
            return flights, hotels, attractions

        def generate_recommendations(self):
            flights, hotels, attractions = self.retrieve_information()
            itinerary = create_itinerary(flights, hotels, attractions)
            return itinerary

        def adjust_based_on_feedback(self, feedback):
            self.experience_data.append(feedback)
            # Analyze feedback and adjust future recommendations
            self.user_preferences = adjust_preferences(self.user_preferences, feedback)

    # Example usage
    travel_agent = Travel_Agent()
    preferences = {
        "destination": "Paris",
        "dates": "2025-04-01 to 2025-04-10",
        "budget": "moderate",
        "interests": ["museums", "cuisine"]
    }
    travel_agent.gather_preferences(preferences)
    itinerary = travel_agent.generate_recommendations()
    print("Suggested Itinerary:", itinerary)
    feedback = {"liked": ["Louvre Museum"], "disliked": ["Eiffel Tower (too crowded)"]}
    travel_agent.adjust_based_on_feedback(feedback)

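Why Metacognition Matters

- Self-Reflection: Agents can analyze their performance and identify areas for improvement.
- Adaptability: Agents can modify strategies based on feedback and changing conditions.
- Error Correction: Agents can autonomously detect and correct mistakes.
- Resource Management: Agents can optimize resource usage, such as time and computational power.

By incorporating metacognition, Travel Agent can provide more personalized and accurate travel recommendations, improving the overall user experience.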

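2. Planning in Agents

Planning is a critical component of AI agent behavior. It involves outlining the steps needed to achieve a goal, taking into account the current state, available resources, and possible obstacles.

Elements of Planning

- Current Task: Define the task clearly.
- Steps to Complete the Task: Break the task down into manageable steps.
- Required Resources: Identify the necessary resources.
- Experience: Use past experiences to inform planning.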
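The four planning elements map naturally onto a small data structure. The following is a minimal sketch under that assumption; the `Plan` class and its `next_step` and `complete` methods are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Plan:
    task: str                       # current task
    steps: List[str]                # steps to complete the task
    resources: List[str]            # required resources
    experience: List[str] = field(default_factory=list)  # lessons learned so far
    completed: List[str] = field(default_factory=list)

    def next_step(self) -> Optional[str]:
        # Return the first step that has not been completed yet, or None.
        for step in self.steps:
            if step not in self.completed:
                return step
        return None

    def complete(self, step: str, lesson: str = "") -> None:
        # Mark a step as done and optionally record what was learned.
        self.completed.append(step)
        if lesson:
            self.experience.append(lesson)


plan = Plan(
    task="Help the user plan a trip to Paris",
    steps=["gather preferences", "retrieve information", "generate recommendations"],
    resources=["flight and hotel booking databases", "attraction and restaurant information"],
)
plan.complete("gather preferences", lesson="User dislikes crowded places")
print(plan.next_step())
```

Recording a lesson alongside each completed step gives the agent something concrete to consult the next time it plans a similar task.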

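Example: Here are the steps Travel Agent needs to take to assist a user in planning their trip effectively:

Steps for Travel Agent

- Gather User Preferences
  - Ask the user for details about their travel dates, budget, interests, and any specific requirements.
  - Examples: "When are you planning to travel?" "What is your budget range?" "What activities do you enjoy on vacation?"
- Retrieve Information
  - Search for relevant travel options based on user preferences.
  - Flights: Look for available flights within the user's budget and preferred travel dates.
  - Accommodations: Find hotels or rental properties that match the user's preferences for location, price, and amenities.
  - Attractions and Restaurants: Identify popular attractions, activities, and dining options that align with the user's interests.
- Generate Recommendations
  - Compile the retrieved information into a personalized itinerary.
  - Provide details such as flight options, hotel reservations, and suggested activities, making sure the recommendations are tailored to the user's preferences.
- Present the Itinerary to the User
  - Share the proposed itinerary with the user for review.
  - Example: "Here is a suggested itinerary for your trip to Paris. It includes flight details, hotel bookings, and a list of recommended activities and restaurants. Let me know what you think!"
- Collect Feedback
  - Ask the user for feedback on the proposed itinerary.
  - Examples: "Do you like the flight options?" "Is the hotel suitable for your needs?" "Are there any activities you would like to add or remove?"
- Adjust Based on Feedback
  - Modify the itinerary based on the user's feedback.
  - Make the necessary changes to flight, accommodation, and activity recommendations to better match the user's preferences.
- Final Confirmation
  - Present the updated itinerary to the user for final confirmation.
  - Example: "I have made the adjustments based on your feedback. Here is the updated itinerary. Does everything look good to you?"
- Book and Confirm Reservations
  - Once the user approves the itinerary, book the flights, accommodations, and any pre-planned activities.
  - Send the confirmation details to the user.
- Provide Ongoing Support
  - Remain available to help the user with any changes or additional requests before and during their trip.
  - Example: "If you need any further assistance during your trip, feel free to reach out to me anytime!"

Example Interaction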

    # search_flights, search_hotels, search_attractions, create_itinerary and
    # adjust_preferences are placeholder helpers assumed to be defined elsewhere.
    class Travel_Agent:
        def __init__(self):
            self.user_preferences = {}
            self.experience_data = []

        def gather_preferences(self, preferences):
            self.user_preferences = preferences

        def retrieve_information(self):
            flights = search_flights(self.user_preferences)
            hotels = search_hotels(self.user_preferences)
            attractions = search_attractions(self.user_preferences)
            return flights, hotels, attractions

        def generate_recommendations(self):
            flights, hotels, attractions = self.retrieve_information()
            itinerary = create_itinerary(flights, hotels, attractions)
            return itinerary

        def adjust_based_on_feedback(self, feedback):
            self.experience_data.append(feedback)
            self.user_preferences = adjust_preferences(self.user_preferences, feedback)

    # Example usage within a booking request
    travel_agent = Travel_Agent()
    preferences = {
        "destination": "Paris",
        "dates": "2025-04-01 to 2025-04-10",
        "budget": "moderate",
        "interests": ["museums", "cuisine"]
    }
    travel_agent.gather_preferences(preferences)
    itinerary = travel_agent.generate_recommendations()
    print("Suggested Itinerary:", itinerary)
    feedback = {"liked": ["Louvre Museum"], "disliked": ["Eiffel Tower (too crowded)"]}
    travel_agent.adjust_based_on_feedback(feedback)

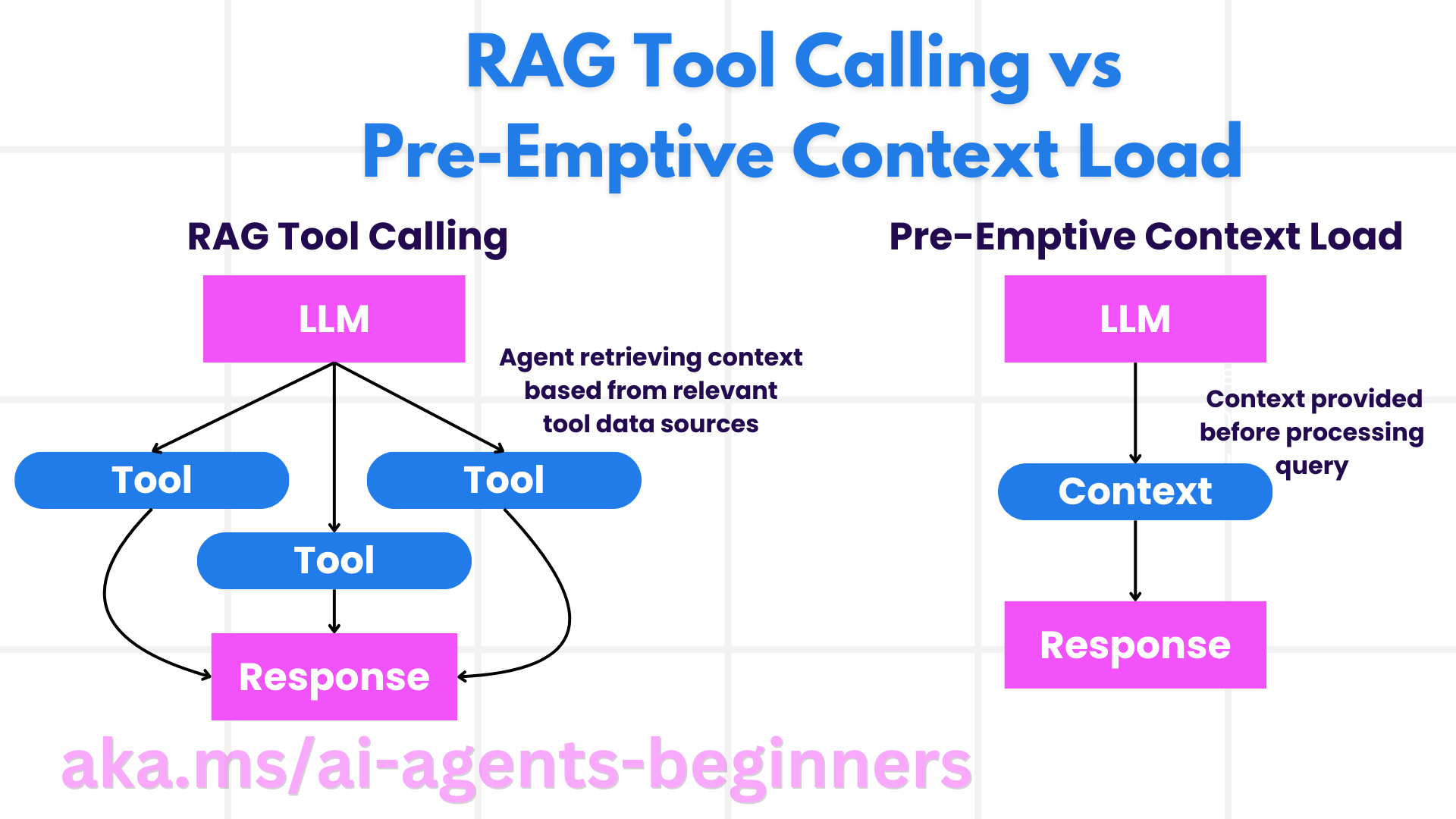

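3. Corrective RAG System

First, let's look at the difference between a RAG tool and pre-emptive context loading.

Retrieval-Augmented Generation (RAG)

RAG combines a retrieval system with a generative model. When a query is made, the retrieval system fetches relevant documents or data from an external source, and the retrieved information is used to augment the input to the generative model. This helps the model generate more accurate and contextually relevant responses.

In a RAG system, the agent retrieves relevant information from a knowledge base and uses it to generate appropriate responses or actions.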
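The retrieve-then-augment flow described above can be sketched in a few lines. This is a toy illustration, not a production retriever: it ranks documents by shared words instead of embeddings, stops at building the augmented prompt rather than calling a model, and `KNOWLEDGE_BASE`, `retrieve`, and `build_augmented_prompt` are hypothetical names with made-up sample data.

```python
import re
from typing import List

# Tiny illustrative knowledge base (sample data, not from the lesson).
KNOWLEDGE_BASE = [
    "The Louvre Museum is in Paris and houses the Mona Lisa.",
    "The Musee d'Orsay in Paris is known for Impressionist art.",
    "Tokyo Tower is an observation tower in Tokyo.",
]


def tokenize(text: str) -> set:
    # Lowercase the text and split it into a set of words.
    return set(re.findall(r"[a-z']+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    # Rank documents by the number of words they share with the query.
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_augmented_prompt(query: str, documents: List[str]) -> str:
    # Prepend the retrieved passages so a generative model can ground its answer.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"


prompt = build_augmented_prompt("Which museums are in Paris?", KNOWLEDGE_BASE)
print(prompt)
```

In a real system the resulting prompt would be sent to a generative model, and the word-overlap ranking would typically be replaced by a vector or keyword search service.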

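Corrective RAG Approach

The corrective RAG approach focuses on using RAG techniques to correct errors and improve the accuracy of AI agents. This involves:

- Prompting Technique: Using specific prompts to guide the agent in retrieving relevant information.
- Tool: Implementing algorithms and mechanisms that enable the agent to evaluate the relevance of the retrieved information and generate accurate responses.
- Evaluation: Continuously assessing the agent's performance and making adjustments to improve its accuracy and efficiency.

Example: Corrective RAG in a Search Agent

Consider a search agent that retrieves information from the web to answer user queries. The corrective RAG approach might involve:

- Prompting Technique: Formulating search queries based on the user's input.
- Tool: Using natural language processing and machine learning algorithms to rank and filter search results.
- Evaluation: Analyzing user feedback to identify and correct inaccuracies in the retrieved information.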
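One way to picture the corrective part is a loop that grades what was retrieved and rewrites the query when nothing passes. The sketch below assumes the search, grading, and rewriting steps are supplied as plain functions; `corrective_retrieve` and the `toy_*` helpers are hypothetical stand-ins, and in practice the grader and rewriter would usually be model calls.

```python
from typing import Callable, List


def corrective_retrieve(
    query: str,
    search: Callable[[str], List[str]],
    grade: Callable[[str, str], float],
    rewrite: Callable[[str], str],
    threshold: float = 0.5,
    max_attempts: int = 3,
) -> List[str]:
    """Retrieve, grade each result, and rewrite the query if nothing passes."""
    current_query = query
    for _ in range(max_attempts):
        results = search(current_query)
        # Grade against the original query so rewrites cannot drift off-topic.
        relevant = [r for r in results if grade(query, r) >= threshold]
        if relevant:
            return relevant
        current_query = rewrite(current_query)
    return []


# Toy stand-ins for a search backend, a relevance grader, and a query rewriter.
CORPUS = {
    "museums paris": ["Eiffel Tower: very crowded landmark"],
    "quiet museums paris": ["Musee Rodin: calm sculpture garden in Paris"],
}


def toy_search(q: str) -> List[str]:
    return CORPUS.get(q, [])


def toy_grade(query: str, result: str) -> float:
    # Pretend the user dislikes crowded places: crowded results score zero.
    return 0.0 if "crowded" in result else 1.0


def toy_rewrite(q: str) -> str:
    return "quiet " + q


results = corrective_retrieve("museums paris", toy_search, toy_grade, toy_rewrite)
print(results)
```

The attempt limit matters: without it, a query that never produces relevant results would keep the agent rewriting indefinitely.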

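Corrective RAG in Travel Agent

Corrective RAG (Retrieval-Augmented Generation) enhances an AI's ability to retrieve and generate information while correcting any inaccuracies. Let's see how Travel Agent can use the corrective RAG approach to provide more accurate and relevant travel recommendations. It relies on the same three elements described above: a prompting technique, a tool, and evaluation.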

Steps for Implementing Corrective RAG in Travel Agent

- Initial User Interaction
  - Travel Agent gathers initial preferences from the user, such as destination, travel dates, budget, and interests.
  - Example:

        preferences = {
            "destination": "Paris",
            "dates": "2025-04-01 to 2025-04-10",
            "budget": "moderate",
            "interests": ["museums", "cuisine"]
        }

- Retrieval of Information
  - Travel Agent retrieves information about flights, accommodations, attractions, and restaurants based on user preferences.
  - Example:

        flights = search_flights(preferences)
        hotels = search_hotels(preferences)
        attractions = search_attractions(preferences)

- Generating Initial Recommendations
  - Travel Agent uses the retrieved information to generate a personalized itinerary.
  - Example:

        itinerary = create_itinerary(flights, hotels, attractions)
        print("Suggested Itinerary:", itinerary)

- Collecting User Feedback
  - Travel Agent asks the user for feedback on the initial recommendations.
  - Example:

        feedback = {
            "liked": ["Louvre Museum"],
            "disliked": ["Eiffel Tower (too crowded)"]
        }

- Corrective RAG Process
  - Prompting Technique: Travel Agent formulates new search queries based on user feedback.

        if "disliked" in feedback:
            preferences["avoid"] = feedback["disliked"]

  - Tool: Travel Agent uses algorithms to rank and filter the new search results, emphasizing relevance based on user feedback.

        new_attractions = search_attractions(preferences)
        new_itinerary = create_itinerary(flights, hotels, new_attractions)
        print("Updated Itinerary:", new_itinerary)

  - Evaluation: Travel Agent continuously assesses the relevance and accuracy of its recommendations by analyzing user feedback and making the necessary adjustments.

        def adjust_preferences(preferences, feedback):
            if "liked" in feedback:
                preferences["favorites"] = feedback["liked"]
            if "disliked" in feedback:
                preferences["avoid"] = feedback["disliked"]
            return preferences

        preferences = adjust_preferences(preferences, feedback)

Practical Example

Here is a simplified Python code example that incorporates the corrective RAG approach in Travel Agent:

    # search_flights, search_hotels, search_attractions, create_itinerary and
    # adjust_preferences are placeholder helpers assumed to be defined elsewhere.
    class Travel_Agent:
        def __init__(self):
            self.user_preferences = {}
            self.experience_data = []

        def gather_preferences(self, preferences):
            self.user_preferences = preferences

        def retrieve_information(self):
            flights = search_flights(self.user_preferences)
            hotels = search_hotels(self.user_preferences)
            attractions = search_attractions(self.user_preferences)
            return flights, hotels, attractions

        def generate_recommendations(self):
            flights, hotels, attractions = self.retrieve_information()
            itinerary = create_itinerary(flights, hotels, attractions)
            return itinerary

        def adjust_based_on_feedback(self, feedback):
            self.experience_data.append(feedback)
            self.user_preferences = adjust_preferences(self.user_preferences, feedback)
            new_itinerary = self.generate_recommendations()
            return new_itinerary

    # Example usage
    travel_agent = Travel_Agent()
    preferences = {
        "destination": "Paris",
        "dates": "2025-04-01 to 2025-04-10",
        "budget": "moderate",
        "interests": ["museums", "cuisine"]
    }
    travel_agent.gather_preferences(preferences)
    itinerary = travel_agent.generate_recommendations()
    print("Suggested Itinerary:", itinerary)
    feedback = {"liked": ["Louvre Museum"], "disliked": ["Eiffel Tower (too crowded)"]}
    new_itinerary = travel_agent.adjust_based_on_feedback(feedback)
    print("Updated Itinerary:", new_itinerary)

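Pre-emptive Context Load

Pre-emptive context loading means loading relevant context or background information into the model before it processes a query. The model has access to this information from the start, which can help it generate better-informed responses without retrieving additional data during the process.

Here is a simplified example of what a pre-emptive context load might look like for a travel agent application in Python: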

    class TravelAgent:
        def __init__(self):
            # Pre-load popular destinations and their information
            self.context = {
                "Paris": {"country": "France", "currency": "Euro", "language": "French", "attractions": ["Eiffel Tower", "Louvre Museum"]},
                "Tokyo": {"country": "Japan", "currency": "Yen", "language": "Japanese", "attractions": ["Tokyo Tower", "Shibuya Crossing"]},
                "New York": {"country": "USA", "currency": "Dollar", "language": "English", "attractions": ["Statue of Liberty", "Times Square"]},
                "Sydney": {"country": "Australia", "currency": "Dollar", "language": "English", "attractions": ["Sydney Opera House", "Bondi Beach"]}
            }

        def get_destination_info(self, destination):
            # Fetch destination information from pre-loaded context
            info = self.context.get(destination)
            if info:
                return f"{destination}:\nCountry: {info['country']}\nCurrency: {info['currency']}\nLanguage: {info['language']}\nAttractions: {', '.join(info['attractions'])}"
            else:
                return f"Sorry, we don't have information on {destination}."

    # Example usage
    travel_agent = TravelAgent()
    print(travel_agent.get_destination_info("Paris"))
    print(travel_agent.get_destination_info("Tokyo"))

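Explanation

- Initialization (__init__ method): The TravelAgent class pre-loads a dictionary containing information about popular destinations such as Paris, Tokyo, New York, and Sydney. The dictionary includes details such as the country, currency, language, and major attractions for each destination.
- Retrieving Information (get_destination_info method): When a user asks about a specific destination, the get_destination_info method fetches the relevant information from the pre-loaded context dictionary.

By pre-loading the context, the travel agent application can respond quickly to user queries without retrieving this information from an external source in real time. This makes the application more efficient and responsive.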

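Bootstrapping the Plan with a Goal Before Iterating

Bootstrapping a plan with a goal means starting with a clear objective or target outcome in mind. By defining this goal up front, the model can use it as a guiding principle throughout the iterative process. This helps ensure that each iteration moves closer to the desired outcome, making the process more efficient and focused.

Here is an example of how a travel agent might bootstrap a travel plan with a goal before iterating, in Python:

Scenario

A travel agent wants to plan a customized vacation for a client. The goal is to create a travel itinerary that maximizes the client's satisfaction based on their preferences and budget.

Steps

- Define the client's preferences and budget.
- Bootstrap the initial plan based on these preferences.
- Iterate to refine the plan, optimizing for the client's satisfaction.

Python Code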

    class TravelAgent:
        def __init__(self, destinations):
            self.destinations = destinations

        def bootstrap_plan(self, preferences, budget):
            plan = []
            total_cost = 0
            for destination in self.destinations:
                if total_cost + destination['cost'] <= budget and self.match_preferences(destination, preferences):
                    plan.append(destination)
                    total_cost += destination['cost']
            return plan

        def match_preferences(self, destination, preferences):
            for key, value in preferences.items():
                if destination.get(key) != value:
                    return False
            return True

        def iterate_plan(self, plan, preferences, budget):
            for i in range(len(plan)):
                # Cost is checked against the plan without the stop being replaced,
                # otherwise the replaced stop would be counted twice.
                others = plan[:i] + plan[i + 1:]
                for destination in self.destinations:
                    if (destination not in plan
                            and self.match_preferences(destination, preferences)
                            and self.calculate_cost(others, destination) <= budget):
                        plan[i] = destination
                        break
            return plan

        def calculate_cost(self, plan, new_destination):
            return sum(destination['cost'] for destination in plan) + new_destination['cost']

    # Example usage
    destinations = [
        {"name": "Paris", "cost": 1000, "activity": "sightseeing"},
        {"name": "Tokyo", "cost": 1200, "activity": "shopping"},
        {"name": "New York", "cost": 900, "activity": "sightseeing"},
        {"name": "Sydney", "cost": 1100, "activity": "beach"},
    ]
    preferences = {"activity": "sightseeing"}
    budget = 2000

    travel_agent = TravelAgent(destinations)
    initial_plan = travel_agent.bootstrap_plan(preferences, budget)
    print("Initial Plan:", initial_plan)

    refined_plan = travel_agent.iterate_plan(initial_plan, preferences, budget)
    print("Refined Plan:", refined_plan)

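Code Explanation

- Initialization (__init__ method): The TravelAgent class is initialized with a list of potential destinations, each with attributes such as name, cost, and activity type.
- Bootstrapping the Plan (bootstrap_plan method): This method creates an initial travel plan based on the client's preferences and budget. It iterates through the list of destinations and adds each one to the plan if it matches the client's preferences and fits within the budget.
- Matching Preferences (match_preferences method): This method checks whether a destination matches the client's preferences.
- Iterating the Plan (iterate_plan method): This method refines the initial plan by trying to replace each destination in the plan with another destination that matches the client's preferences and keeps the plan within budget.
- Calculating Cost (calculate_cost method): This method calculates the total cost of a given plan plus a potential new destination.

Example Usage

- Initial Plan: The travel agent creates an initial plan based on the client's preference for sightseeing and a budget of $2000.
- Refined Plan: The travel agent iterates on the plan, optimizing for the client's preferences and budget.

By bootstrapping the plan with a clear goal (for example, maximizing client satisfaction) and iterating to refine it, the travel agent can create a customized and optimized itinerary for the client. This approach keeps the travel plan aligned with the client's preferences and budget from the start and allows it to improve with each iteration.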

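Taking Advantage of LLMs for Re-ranking and Scoring

Large language models (LLMs) can be used for re-ranking and scoring by evaluating the relevance and quality of retrieved documents or generated responses. Here is how it works:

Retrieval: The initial retrieval step fetches a set of candidate documents or responses based on the query.

Re-ranking: The LLM evaluates these candidates and re-ranks them based on their relevance and quality. This step ensures that the most relevant, highest-quality information is presented first.

Scoring: The LLM assigns a score to each candidate, reflecting its relevance and quality. This helps in selecting the best response or document for the user.

By using LLMs for re-ranking and scoring, the system can provide more accurate and contextually relevant information, improving the overall user experience.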
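The retrieve, score, and re-rank steps can be separated from the model itself by passing the scorer in as a function. In the sketch below, `rerank` and `keyword_overlap_score` are hypothetical names, and the word-overlap scorer is only a stand-in for an LLM judgement so that the example runs without any external service.

```python
from typing import Callable, List, Tuple


def rerank(
    query: str,
    candidates: List[str],
    score_fn: Callable[[str, str], float],
) -> List[Tuple[str, float]]:
    # Score every candidate, then sort from most to least relevant.
    scored = [(candidate, score_fn(query, candidate)) for candidate in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def keyword_overlap_score(query: str, candidate: str) -> float:
    # Stand-in for an LLM judgement: fraction of query words found in the candidate.
    query_words = set(query.lower().split())
    if not query_words:
        return 0.0
    candidate_words = set(candidate.lower().split())
    return len(query_words & candidate_words) / len(query_words)


candidates = [
    "Sydney has stunning beaches",
    "Paris is known for art museums",
    "Tokyo blends modern art and temples",
]
ranked = rerank("art museums", candidates, keyword_overlap_score)
for text, score in ranked:
    print(f"{score:.1f}  {text}")
```

Swapping `keyword_overlap_score` for a function that asks a model to rate each candidate would keep the rest of the pipeline unchanged.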

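Here is an example of how a travel agent might use a large language model (LLM) to re-rank and score travel destinations based on user preferences, in Python:

Scenario - Travel Based on Preferences

A travel agent wants to recommend the best travel destinations to a client based on their preferences. The LLM helps re-rank and score the destinations so that the most relevant options are presented.

Steps

- Collect user preferences.
- Retrieve a list of potential travel destinations.
- Use the LLM to re-rank and score the destinations based on user preferences.

Here is how you can update the previous example to use the Azure OpenAI service:

Requirements

- You need an Azure subscription.
- Create an Azure OpenAI resource and get your API key.

Example Python Code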

    import requests

    class TravelAgent:
        def __init__(self, destinations):
            self.destinations = destinations

        def get_recommendations(self, preferences, api_key, endpoint):
            # Generate a prompt for Azure OpenAI
            prompt = self.generate_prompt(preferences)

            # Define headers and payload for the request.
            # Key-based authentication to Azure OpenAI uses the "api-key" header.
            headers = {
                'Content-Type': 'application/json',
                'api-key': api_key
            }
            payload = {
                "prompt": prompt,
                "max_tokens": 150,
                "temperature": 0.7
            }

            # Call the Azure OpenAI API to get the re-ranked and scored destinations
            response = requests.post(endpoint, headers=headers, json=payload)
            response.raise_for_status()  # surface HTTP errors instead of failing on parsing
            response_data = response.json()

            # Extract and return the recommendations
            recommendations = response_data['choices'][0]['text'].strip().split('\n')
            return recommendations

        def generate_prompt(self, preferences):
            prompt = "Here are the travel destinations ranked and scored based on the following user preferences:\n"
            for key, value in preferences.items():
                prompt += f"{key}: {value}\n"
            prompt += "\nDestinations:\n"
            for destination in self.destinations:
                prompt += f"- {destination['name']}: {destination['description']}\n"
            return prompt

    # Example usage
    destinations = [
        {"name": "Paris", "description": "City of lights, known for its art, fashion, and culture."},
        {"name": "Tokyo", "description": "Vibrant city, famous for its modernity and traditional temples."},
        {"name": "New York", "description": "The city that never sleeps, with iconic landmarks and diverse culture."},
        {"name": "Sydney", "description": "Beautiful harbour city, known for its opera house and stunning beaches."},
    ]
    preferences = {"activity": "sightseeing", "culture": "diverse"}
    api_key = 'your_azure_openai_api_key'
    endpoint = 'https://your-endpoint.com/openai/deployments/your-deployment-name/completions?api-version=2022-12-01'

    travel_agent = TravelAgent(destinations)
    recommendations = travel_agent.get_recommendations(preferences, api_key, endpoint)
    print("Recommended Destinations:")
    for rec in recommendations:
        print(rec)

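Code Explanation - Preference Booker

- Initialization: The TravelAgent class is initialized with a list of potential travel destinations, each with attributes such as name and description.
- Getting Recommendations (get_recommendations method): This method generates a prompt for the Azure OpenAI service based on the user's preferences and makes an HTTP POST request to the Azure OpenAI API to get the re-ranked and scored destinations.
- Generating the Prompt (generate_prompt method): This method builds the prompt for Azure OpenAI, including the user's preferences and the list of destinations. The prompt guides the model to re-rank and score the destinations based on the provided preferences.
- API Call: The requests library is used to make an HTTP POST request to the Azure OpenAI API endpoint. The response contains the re-ranked and scored destinations.
- Example Usage: The travel agent collects user preferences (for example, an interest in sightseeing and diverse culture) and uses the Azure OpenAI service to get re-ranked and scored recommendations for travel destinations.

Make sure to replace your_azure_openai_api_key with your actual Azure OpenAI API key and https://your-endpoint.com/... with the actual endpoint URL of your Azure OpenAI deployment.

By using the LLM for re-ranking and scoring, the travel agent can provide more personalized and relevant travel recommendations to clients, improving their overall experience.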

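RAG: Prompting Technique vs Tool

Retrieval-Augmented Generation (RAG) can serve as both a prompting technique and a tool in the development of AI agents. Understanding the distinction between the two can help you use RAG more effectively in your projects.

RAG as a Prompting Technique

What is it?

- As a prompting technique, RAG involves formulating specific queries or prompts to guide the retrieval of relevant information from a large corpus or database. This information is then used to generate responses or actions.

How it works

- Formulate Prompts: Create well-structured prompts or queries based on the task at hand or the user's input.
- Retrieve Information: Use the prompts to search for relevant data in a pre-existing knowledge base or dataset.
- Generate Response: Combine the retrieved information with a generative AI model to produce a comprehensive and coherent response.

Example in Travel Agent:

- User Input: "I want to visit museums in Paris."
- Prompt: "Find top museums in Paris."
- Retrieved Information: Details about the Louvre Museum, Musée d'Orsay, and so on.
- Generated Response: "Here are some top museums in Paris: the Louvre Museum, Musée d'Orsay, and Centre Pompidou."

RAG as a Tool

What is it?

- As a tool, RAG is an integrated system that automates the retrieval and generation process, making it easier for developers to implement complex AI functionality without manually crafting prompts for each query.

How it works

- Integration: Embed RAG within the AI agent's architecture so that it handles retrieval and generation tasks automatically.
- Automation: The tool manages the entire process, from receiving user input to generating the final response, without requiring explicit prompts for each step.
- Efficiency: Streamlining retrieval and generation improves the agent's performance, enabling quicker and more accurate responses.

Example in Travel Agent:

- User Input: "I want to visit museums in Paris."
- RAG Tool: Automatically retrieves information about museums and generates a response.
- Generated Response: "Here are some top museums in Paris: the Louvre Museum, Musée d'Orsay, and Centre Pompidou."

Comparison

| Aspect | Prompting Technique | Tool |
|---|---|---|
| Manual vs Automatic | Prompts are formulated manually for each query. | Retrieval and generation are automated. |
| Control | Offers more control over the retrieval process. | Streamlines and automates retrieval and generation. |
| Flexibility | Allows prompts to be customized for specific needs. | More efficient for large-scale implementations. |
| Complexity | Requires crafting and tuning prompts. | Easier to integrate into an AI agent's architecture. |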

Practical Examples

Prompting Technique Example

    # search_web is a placeholder search helper assumed to be defined elsewhere.
    def search_museums_in_paris():
        prompt = "Find top museums in Paris"
        search_results = search_web(prompt)
        return search_results

    museums = search_museums_in_paris()
    print("Top Museums in Paris:", museums)

Tool Example

    # RAGTool is a placeholder class assumed to be defined elsewhere.
    class Travel_Agent:
        def __init__(self):
            self.rag_tool = RAGTool()

        def get_museums_in_paris(self):
            user_input = "I want to visit museums in Paris."
            response = self.rag_tool.retrieve_and_generate(user_input)
            return response

    travel_agent = Travel_Agent()
    museums = travel_agent.get_museums_in_paris()
    print("Top Museums in Paris:", museums)
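The tool example relies on a RAGTool class that the lesson does not define. A minimal hypothetical version is sketched below purely to make the idea concrete: the document list is made-up sample data, retrieval is a simple city match, and the "generator" is a string template standing in for a language model call.

```python
class RAGTool:
    """Hypothetical stand-in that bundles retrieval and generation behind one call."""

    def __init__(self, documents=None, generator=None):
        # Sample documents (illustrative data only).
        self.documents = documents or [
            {"name": "Louvre Museum", "city": "Paris"},
            {"name": "Musee d'Orsay", "city": "Paris"},
            {"name": "Tokyo National Museum", "city": "Tokyo"},
        ]
        # In a real tool the generator would wrap a language model call.
        self.generator = generator or self._template_generator

    def retrieve(self, user_input):
        # Keep documents whose city is mentioned in the user's input.
        text = user_input.lower()
        return [doc for doc in self.documents if doc["city"].lower() in text]

    def retrieve_and_generate(self, user_input):
        # One call handles both steps, so the caller never writes a prompt.
        matches = self.retrieve(user_input)
        return self.generator(user_input, matches)

    @staticmethod
    def _template_generator(user_input, matches):
        if not matches:
            return "Sorry, nothing relevant was found."
        names = ", ".join(doc["name"] for doc in matches)
        return f"Here are some options: {names}."


tool = RAGTool()
response = tool.retrieve_and_generate("I want to visit museums in Paris.")
print(response)
```

The caller only sees `retrieve_and_generate`, which is the practical difference from the prompting-technique example, where the prompt is written by hand.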

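Evaluating Relevancy

Evaluating relevancy is a crucial aspect of AI agent performance. It ensures that the information the agent retrieves and generates is appropriate, accurate, and useful to the user. Let's explore how to evaluate relevancy in AI agents, including practical examples and techniques.

Key Concepts in Evaluating Relevancy

- Context Awareness:
  - The agent must understand the context of the user's query in order to retrieve and generate relevant information.
  - Example: If a user asks for "the best restaurants in Paris," the agent should consider the user's preferences, such as cuisine type and budget.
- Accuracy:
  - The information provided by the agent should be factually correct and up to date.
  - Example: Recommending restaurants that are currently open and well reviewed rather than outdated or closed options.
- User Intent:
  - The agent should infer the intent behind the user's query in order to provide the most relevant information.
  - Example: If a user asks for "budget-friendly hotels," the agent should prioritize affordable options.
- Feedback Loop:
  - Continuously collecting and analyzing user feedback helps the agent refine its relevancy evaluation process.
  - Example: Incorporating user ratings and feedback on previous recommendations to improve future responses.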

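Practical Techniques for Evaluating Relevancy

- Relevance Scoring:
  - Assign a relevance score to each retrieved item based on how well it matches the user's query and preferences.
  - Example:

        def relevance_score(item, query):
            # Assumes query['budget'] is numeric and comparable with item['price'].
            score = 0
            if item['category'] in query['interests']:
                score += 1
            if item['price'] <= query['budget']:
                score += 1
            if item['location'] == query['destination']:
                score += 1
            return score

- Filtering and Ranking:
  - Filter out irrelevant items and rank the remaining ones by their relevance scores.
  - Example:

        def filter_and_rank(items, query):
            ranked_items = sorted(items, key=lambda item: relevance_score(item, query), reverse=True)
            return ranked_items[:10]  # Return top 10 relevant items

- Natural Language Processing (NLP):
  - Use NLP techniques to understand the user's query and retrieve relevant information.
  - Example:

        def process_query(query):
            # Use NLP to extract key information from the user's query.
            # nlp is a placeholder for an NLP pipeline assumed to be defined elsewhere.
            processed_query = nlp(query)
            return processed_query

- User Feedback Integration:
  - Collect user feedback on the recommendations provided and use it to adjust future relevance evaluations.
  - Example:

        def adjust_based_on_feedback(feedback, items):
            for item in items:
                # Start from 0 when an item has no relevance value yet.
                if item['name'] in feedback['liked']:
                    item['relevance'] = item.get('relevance', 0) + 1
                if item['name'] in feedback['disliked']:
                    item['relevance'] = item.get('relevance', 0) - 1
            return items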

Example: Evaluating Relevancy in Travel Agent

Here is a practical example of how Travel Agent can evaluate the relevancy of travel recommendations:

    # search_flights, search_hotels, search_attractions and create_itinerary are
    # placeholder helpers assumed to be defined elsewhere. Items are assumed to be
    # dictionaries with 'name', 'category', 'price' and 'location' keys.
    class Travel_Agent:
        def __init__(self):
            self.user_preferences = {}
            self.experience_data = []

        def gather_preferences(self, preferences):
            self.user_preferences = preferences

        def retrieve_information(self):
            flights = search_flights(self.user_preferences)
            hotels = search_hotels(self.user_preferences)
            attractions = search_attractions(self.user_preferences)
            return flights, hotels, attractions

        def generate_recommendations(self):
            flights, hotels, attractions = self.retrieve_information()
            ranked_hotels = self.filter_and_rank(hotels, self.user_preferences)
            itinerary = create_itinerary(flights, ranked_hotels, attractions)
            return itinerary

        def filter_and_rank(self, items, query):
            ranked_items = sorted(items, key=lambda item: self.relevance_score(item, query), reverse=True)
            return ranked_items[:10]  # Return top 10 relevant items

        def relevance_score(self, item, query):
            score = 0
            if item['category'] in query['interests']:
                score += 1
            if item['price'] <= query['budget']:
                score += 1
            if item['location'] == query['destination']:
                score += 1
            return score

        def adjust_based_on_feedback(self, feedback, items):
            for item in items:
                # Start from 0 when an item has no relevance value yet.
                if item['name'] in feedback['liked']:
                    item['relevance'] = item.get('relevance', 0) + 1
                if item['name'] in feedback['disliked']:
                    item['relevance'] = item.get('relevance', 0) - 1
            return items

    # Example usage
    travel_agent = Travel_Agent()
    preferences = {
        "destination": "Paris",
        "dates": "2025-04-01 to 2025-04-10",
        "budget": 300,  # assumed numeric price ceiling, since relevance_score compares it with item['price']
        "interests": ["museums", "cuisine"]
    }
    travel_agent.gather_preferences(preferences)
    itinerary = travel_agent.generate_recommendations()
    print("Suggested Itinerary:", itinerary)
    feedback = {"liked": ["Louvre Museum"], "disliked": ["Eiffel Tower (too crowded)"]}
    # Assumes create_itinerary returns a dictionary with a 'hotels' entry.
    updated_items = travel_agent.adjust_based_on_feedback(feedback, itinerary['hotels'])
    print("Updated Itinerary with Feedback:", updated_items)

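Search with Intent

Searching with intent involves understanding and interpreting the underlying purpose or goal behind a user's query in order to retrieve and generate the most relevant and useful information. This approach goes beyond simple keyword matching and focuses on grasping the user's actual needs and context.

Key Concepts in Searching with Intent

- Understanding User Intent:
  - User intent can be grouped into three main types: informational, navigational, and transactional.
  - Informational Intent: The user seeks information about a topic (for example, "What are the best museums in Paris?").
  - Navigational Intent: The user wants to navigate to a specific website or page (for example, "Louvre Museum official website").
  - Transactional Intent: The user aims to perform a transaction, such as booking a flight or making a purchase (for example, "Book a flight to Paris").
- Context Awareness:
  - Analyzing the context of the user's query helps identify their intent accurately. This includes considering previous interactions, user preferences, and the specific details of the current query.
- Natural Language Processing (NLP):
  - NLP techniques are used to understand and interpret the natural language queries provided by users. This includes tasks such as entity recognition, sentiment analysis, and query parsing.
- Personalization:
  - Personalizing search results based on the user's history, preferences, and feedback improves the relevancy of the retrieved information.

Practical Example: Searching with Intent in Travel Agent

Let's take Travel Agent as an example to see how searching with intent can be implemented.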

- Gathering User Preferences

      class Travel_Agent:
          def __init__(self):
              self.user_preferences = {}

          def gather_preferences(self, preferences):
              self.user_preferences = preferences

- Understanding User Intent

      def identify_intent(query):
          # Compare in lowercase so "Book" and "book" are treated alike.
          query = query.lower()
          if "book" in query or "purchase" in query:
              return "transactional"
          elif "website" in query or "official" in query:
              return "navigational"
          else:
              return "informational"

- Context Awareness

      def analyze_context(query, user_history):
          # Combine current query with user history to understand context
          context = {
              "current_query": query,
              "user_history": user_history
          }
          return context

- Search and Personalize Results

      # search_web is a placeholder search helper assumed to be defined elsewhere.
      def search_with_intent(query, preferences, user_history):
          intent = identify_intent(query)
          context = analyze_context(query, user_history)
          if intent == "informational":
              search_results = search_information(query, preferences)
          elif intent == "navigational":
              search_results = search_navigation(query)
          elif intent == "transactional":
              search_results = search_transaction(query, preferences)
          personalized_results = personalize_results(search_results, user_history)
          return personalized_results

      def search_information(query, preferences):
          # Example search logic for informational intent
          interests = ", ".join(preferences['interests'])
          results = search_web(f"best {interests} in {preferences['destination']}")
          return results

      def search_navigation(query):
          # Example search logic for navigational intent
          results = search_web(query)
          return results

      def search_transaction(query, preferences):
          # Example search logic for transactional intent
          results = search_web(f"book {query} to {preferences['destination']}")
          return results

      def personalize_results(results, user_history):
          # Example personalization logic
          personalized = [result for result in results if result not in user_history]
          return personalized[:10]  # Return top 10 personalized results

- Example Usage

      travel_agent = Travel_Agent()
      preferences = {
          "destination": "Paris",
          "interests": ["museums", "cuisine"]
      }
      travel_agent.gather_preferences(preferences)
      user_history = ["Louvre Museum website", "Book flight to Paris"]
      query = "best museums in Paris"
      results = search_with_intent(query, preferences, user_history)
      print("Search Results:", results)

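4. Generating Code as a Tool

Code generating agents use AI models to write and execute code, solving complex problems and automating tasks.

Code Generating Agents

Code generating agents use generative AI models to write and execute code. These agents can solve complex problems, automate tasks, and provide valuable insights by generating and running code in various programming languages.

Practical Applications

- Automated Code Generation: Generate code snippets for specific tasks, such as data analysis, web scraping, or machine learning.
- SQL as a RAG: Use SQL queries to retrieve and manipulate data from databases.
- Problem Solving: Create and execute code to solve specific problems, such as optimizing algorithms or analyzing data.

Example: Code Generating Agent for Data Analysis

Imagine you are designing a code generating agent. Here is how it might work:

- Task: Analyze a dataset to identify trends and patterns.
- Steps:
  - Load the dataset into a data analysis tool.
  - Generate SQL queries to filter and aggregate the data.
  - Execute the queries and retrieve the results.
  - Use the results to generate visualizations and insights.
- Required Resources: Access to the dataset, data analysis tools, and SQL capabilities.
- Experience: Use past analysis results to improve the accuracy and relevance of future analyses.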
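The load, generate, and execute steps can be tried end to end with an in-memory SQLite database. This is a sketch under stated assumptions: the `bookings` table and its rows are invented sample data, `generate_aggregate_query` is a hypothetical helper, and a fixed template stands in for the model that would normally write the query.

```python
import sqlite3


def generate_aggregate_query(table, group_by, metric):
    # Identifiers cannot be bound as SQL parameters, so check them against an allow-list.
    allowed = {"bookings", "destination", "price"}
    for name in (table, group_by, metric):
        if name not in allowed:
            raise ValueError(f"Unexpected identifier: {name}")
    return (
        f"SELECT {group_by}, COUNT(*), AVG({metric}) "
        f"FROM {table} GROUP BY {group_by} ORDER BY {group_by}"
    )


# Step 1: load a small dataset (illustrative rows only).
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE bookings (destination TEXT, price REAL)")
connection.executemany(
    "INSERT INTO bookings VALUES (?, ?)",
    [("Paris", 1000.0), ("Paris", 1200.0), ("Tokyo", 1500.0)],
)

# Step 2: generate the SQL query.
query = generate_aggregate_query("bookings", "destination", "price")

# Step 3: execute the query and retrieve the results.
rows = connection.execute(query).fetchall()
connection.close()

# Step 4: turn the results into a simple insight.
for destination, count, average in rows:
    print(f"{destination}: {count} bookings, average price {average:.0f}")
```

Validating generated identifiers before execution is one way to limit what a code generating agent can run against a real database.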

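Example: Code Generating Agent for Travel Agent

In this example, we will design a code generating agent, Travel Agent, that assists users in planning their travel by generating and executing code. This agent can handle tasks such as fetching travel options, filtering results, and compiling an itinerary using generative AI.

Overview of the Code Generating Agent

- Gathering User Preferences: Collects user input such as destination, travel dates, budget, and interests.
- Generating Code to Fetch Data: Generates code snippets to retrieve data about flights, hotels, and attractions.
- Executing Generated Code: Runs the generated code to fetch real-time information.
- Generating Itinerary: Compiles the fetched data into a personalized travel plan.
- Adjusting Based on Feedback: Receives user feedback and regenerates code if necessary to refine the results.

Step-by-Step Implementation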

- Gathering User Preferences

      class Travel_Agent:
          def __init__(self):
              self.user_preferences = {}

          def gather_preferences(self, preferences):
              self.user_preferences = preferences

- Generating Code to Fetch Data

      import textwrap

      def generate_code_to_fetch_data(preferences):
          # Example: Generate code to search for flights based on user preferences.
          # api.example.com is a placeholder endpoint.
          code = f"""
          def search_flights():
              import requests
              response = requests.get('https://api.example.com/flights', params={preferences})
              return response.json()
          """
          # Remove the common indentation so the generated code can be executed.
          return textwrap.dedent(code)

      def generate_code_to_fetch_hotels(preferences):
          # Example: Generate code to search for hotels
          code = f"""
          def search_hotels():
              import requests
              response = requests.get('https://api.example.com/hotels', params={preferences})
              return response.json()
          """
          return textwrap.dedent(code)

- Executing Generated Code

      def execute_code(code, function_name):
          # Run the generated code in its own namespace, then call the function it defines.
          # exec runs arbitrary code: use it only with trusted input or inside a sandbox.
          namespace = {}
          exec(code, namespace)
          return namespace[function_name]()

      travel_agent = Travel_Agent()
      preferences = {
          "destination": "Paris",
          "dates": "2025-04-01 to 2025-04-10",
          "budget": "moderate",
          "interests": ["museums", "cuisine"]
      }
      travel_agent.gather_preferences(preferences)

      flight_code = generate_code_to_fetch_data(preferences)
      hotel_code = generate_code_to_fetch_hotels(preferences)

      flights = execute_code(flight_code, "search_flights")
      hotels = execute_code(hotel_code, "search_hotels")

      print("Flight Options:", flights)
      print("Hotel Options:", hotels)

- Generating Itinerary

      def generate_itinerary(flights, hotels, attractions):
          itinerary = {
              "flights": flights,
              "hotels": hotels,
              "attractions": attractions
          }
          return itinerary

      # search_attractions is a placeholder helper assumed to be defined elsewhere.
      attractions = search_attractions(preferences)
      itinerary = generate_itinerary(flights, hotels, attractions)
      print("Suggested Itinerary:", itinerary)

- Adjusting Based on Feedback

      def adjust_based_on_feedback(feedback, preferences):
          # Adjust preferences based on user feedback
          if "liked" in feedback:
              preferences["favorites"] = feedback["liked"]
          if "disliked" in feedback:
              preferences["avoid"] = feedback["disliked"]
          return preferences

      feedback = {"liked": ["Louvre Museum"], "disliked": ["Eiffel Tower (too crowded)"]}
      updated_preferences = adjust_based_on_feedback(feedback, preferences)

      # Regenerate and execute code with updated preferences
      updated_flight_code = generate_code_to_fetch_data(updated_preferences)
      updated_hotel_code = generate_code_to_fetch_hotels(updated_preferences)

      updated_flights = execute_code(updated_flight_code, "search_flights")
      updated_hotels = execute_code(updated_hotel_code, "search_hotels")

      updated_itinerary = generate_itinerary(updated_flights, updated_hotels, attractions)
      print("Updated Itinerary:", updated_itinerary)

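Leveraging Environmental Awareness and Reasoning

Knowing the schema of a table can improve the query generation process through environmental awareness and reasoning.

Here is an example of how this can be done:

- Understanding the Schema: The system understands the schema of the table and uses this information to ground the query generation.
- Adjusting Based on Feedback: The system adjusts user preferences based on feedback and reasons about which fields in the schema need to be updated.
- Generating and Executing Queries: The system generates and executes queries to fetch updated flight and hotel data based on the new preferences.

Here is an updated Python code example that incorporates these concepts: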

    def adjust_based_on_feedback(feedback, preferences, schema):
        # Adjust preferences based on user feedback
        if "liked" in feedback:
            preferences["favorites"] = feedback["liked"]
        if "disliked" in feedback:
            preferences["avoid"] = feedback["disliked"]
        # Reasoning based on schema to adjust other related preferences
        for field in schema:
            if field in preferences:
                preferences[field] = adjust_based_on_environment(feedback, field, schema)
        return preferences

    def adjust_based_on_environment(feedback, field, schema):
        # Custom logic to adjust preferences based on schema and feedback
        if field in feedback["liked"]:
            return schema[field]["positive_adjustment"]
        elif field in feedback["disliked"]:
            return schema[field]["negative_adjustment"]
        return schema[field]["default"]

    def generate_code_to_fetch_data(preferences):
        # Generate code to fetch flight data based on updated preferences
        return f"fetch_flights(preferences={preferences})"

    def generate_code_to_fetch_hotels(preferences):
        # Generate code to fetch hotel data based on updated preferences
        return f"fetch_hotels(preferences={preferences})"

    def execute_code(code):
        # Simulate execution of code and return mock data
        return {"data": f"Executed: {code}"}

    def generate_itinerary(flights, hotels, attractions):
        # Generate itinerary based on flights, hotels, and attractions
        return {"flights": flights, "hotels": hotels, "attractions": attractions}

    # Example schema
    schema = {
        "favorites": {"positive_adjustment": "increase", "negative_adjustment": "decrease", "default": "neutral"},
        "avoid": {"positive_adjustment": "decrease", "negative_adjustment": "increase", "default": "neutral"}
    }

    # Example usage
    preferences = {"favorites": "sightseeing", "avoid": "crowded places"}
    feedback = {"liked": ["Louvre Museum"], "disliked": ["Eiffel Tower (too crowded)"]}
    updated_preferences = adjust_based_on_feedback(feedback, preferences, schema)

    # Regenerate and execute code with updated preferences
    updated_flight_code = generate_code_to_fetch_data(updated_preferences)
    updated_hotel_code = generate_code_to_fetch_hotels(updated_preferences)

    updated_flights = execute_code(updated_flight_code)
    updated_hotels = execute_code(updated_hotel_code)

    updated_itinerary = generate_itinerary(updated_flights, updated_hotels, feedback["liked"])
    print("Updated Itinerary:", updated_itinerary)

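Explanation - Booking Based on Feedback

- Schema Awareness: The schema dictionary defines how preferences should be adjusted based on feedback. It includes fields such as favorites and avoid, along with the corresponding adjustments.
- Adjusting Preferences (adjust_based_on_feedback method): This method adjusts preferences based on user feedback and the schema.
- Environment-Based Adjustments (adjust_based_on_environment method): This method customizes the adjustments based on the schema and feedback.
- Generating and Executing Queries: The system generates code to fetch updated flight and hotel data based on the adjusted preferences and simulates the execution of these queries.
- Generating Itinerary: The system creates an updated itinerary based on the new flight, hotel, and attraction data.

By making the system environment-aware and having it reason over the schema, it can generate more accurate and relevant queries, leading to better travel recommendations and a more personalized user experience.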

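Using SQL as a Retrieval-Augmented Generation (RAG) Technique

SQL (Structured Query Language) is a powerful tool for interacting with databases. When used as part of a Retrieval-Augmented Generation (RAG) approach, SQL can retrieve relevant data from databases to inform an AI agent and generate responses or actions. Let's explore how SQL can be used as a RAG technique in the context of Travel Agent.

Key Concepts

- Database Interaction:
  - SQL is used to query databases, retrieve relevant information, and manipulate data.
  - Example: Fetching flight details, hotel information, and attractions from a travel database.
- Integration with RAG:
  - SQL queries are generated based on user input and preferences.
  - The retrieved data is then used to generate personalized recommendations or actions.
- Dynamic Query Generation:
  - The AI agent generates dynamic SQL queries based on the context and the user's needs.
  - Example: Customizing SQL queries to filter results by budget, dates, and interests.

Applications

- Automated Code Generation: Generate code snippets for specific tasks.
- SQL as a RAG: Use SQL queries to manipulate data.
- Problem Solving: Create and execute code to solve problems.

Example: A data analysis agent

- Task: Analyze a dataset to find trends.
- Steps:
  - Load the dataset.
  - Generate SQL queries to filter the data.
  - Execute the queries and retrieve the results.
  - Generate visualizations and insights.
- Resources: Dataset access, SQL capabilities.
- Experience: Use past results to improve future analyses.

Practical Example: Using SQL in Travel Agent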

- Gathering User Preferences

      class Travel_Agent:
          def __init__(self):
              self.user_preferences = {}

          def gather_preferences(self, preferences):
              self.user_preferences = preferences

- Generating SQL Queries

      def generate_sql_query(table, preferences):
          # Build a parameterized query: values are bound separately, which avoids
          # SQL injection and quoting problems. Table and column names are assumed
          # to come from trusted code, not from user input.
          conditions = [f"{key} = ?" for key in preferences]
          params = [", ".join(value) if isinstance(value, list) else value
                    for value in preferences.values()]
          query = f"SELECT * FROM {table} WHERE " + " AND ".join(conditions)
          return query, params

- Executing SQL Queries

      import sqlite3

      def execute_sql_query(query, params=(), database="travel.db"):
          connection = sqlite3.connect(database)
          try:
              cursor = connection.cursor()
              cursor.execute(query, params)
              return cursor.fetchall()
          finally:
              connection.close()

- Generating Recommendations

      def generate_recommendations(preferences):
          flights = execute_sql_query(*generate_sql_query("flights", preferences))
          hotels = execute_sql_query(*generate_sql_query("hotels", preferences))
          attractions = execute_sql_query(*generate_sql_query("attractions", preferences))
          itinerary = {
              "flights": flights,
              "hotels": hotels,
              "attractions": attractions
          }
          return itinerary

      travel_agent = Travel_Agent()
      preferences = {
          "destination": "Paris",
          "dates": "2025-04-01 to 2025-04-10",
          "budget": "moderate",
          "interests": ["museums", "cuisine"]
      }
      travel_agent.gather_preferences(preferences)
      itinerary = generate_recommendations(preferences)
      print("Suggested Itinerary:", itinerary)

Example SQL Queries

- Flight Query

      SELECT * FROM flights WHERE destination='Paris' AND dates='2025-04-01 to 2025-04-10' AND budget='moderate';

- Hotel Query

      SELECT * FROM hotels WHERE destination='Paris' AND budget='moderate';

- Attraction Query

      SELECT * FROM attractions WHERE destination='Paris' AND interests='museums, cuisine';

By using SQL as part of a Retrieval-Augmented Generation (RAG) technique, AI agents like Travel Agent can dynamically retrieve and use relevant data to provide accurate and personalized recommendations.

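Example of Metacognition

To demonstrate an implementation of metacognition, let's create a simple agent that reflects on its decision-making process while solving a problem. For this example, we will build a system in which an agent tries to optimize its choice of hotel, then evaluates its own reasoning and adjusts its strategy when it makes an error or a suboptimal choice.

We will simulate this with a basic example in which the agent selects hotels based on a combination of price and quality, but "reflects" on its decisions and adjusts accordingly.

How this illustrates metacognition

- Initial Decision: The agent picks the cheapest hotel without considering the impact on quality.
- Reflection and Evaluation: After the initial choice, the agent uses user feedback to check whether the hotel was a "bad" choice. If it finds that the hotel's quality was too low, it reflects on its reasoning.
- Adjusting Strategy: The agent adjusts its strategy based on its reflection, switching from "cheapest" to "highest_quality," which improves its decision-making in future iterations.

Here is an example: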

    class HotelRecommendationAgent:
        def __init__(self):
            self.previous_choices = []  # Stores the hotels chosen previously
            self.corrected_choices = []  # Stores the corrected choices
            self.recommendation_strategies = ['cheapest', 'highest_quality']  # Available strategies

        def recommend_hotel(self, hotels, strategy):
            """
            Recommend a hotel based on the chosen strategy.
            The strategy can either be 'cheapest' or 'highest_quality'.
            """
            if strategy == 'cheapest':
                recommended = min(hotels, key=lambda x: x['price'])
            elif strategy == 'highest_quality':
                recommended = max(hotels, key=lambda x: x['quality'])
            else:
                recommended = None
            self.previous_choices.append((strategy, recommended))
            return recommended

        def reflect_on_choice(self):
            """
            Reflect on the last choice made and decide if the agent should adjust its strategy.
            The agent considers if the previous choice led to a poor outcome.
            """
            if not self.previous_choices:
                return "No choices made yet."

            last_choice_strategy, last_choice = self.previous_choices[-1]
            # Let's assume we have some user feedback that tells us whether the last choice was good or not
            user_feedback = self.get_user_feedback(last_choice)

            if user_feedback == "bad":
                # Adjust strategy if the previous choice was unsatisfactory
                new_strategy = 'highest_quality' if last_choice_strategy == 'cheapest' else 'cheapest'
                self.corrected_choices.append((new_strategy, last_choice))
                return f"Reflecting on choice. Adjusting strategy to {new_strategy}."
            else:
                return "The choice was good. No need to adjust."

        def get_user_feedback(self, hotel):
            """
            Simulate user feedback based on hotel attributes.
            For simplicity, assume if the hotel is too cheap, the feedback is "bad".
            If the hotel has quality less than 7, feedback is "bad".
            """
            if hotel['price'] < 100 or hotel['quality'] < 7:
                return "bad"
            return "good"

    # Simulate a list of hotels (price and quality)
    hotels = [
        {'name': 'Budget Inn', 'price': 80, 'quality': 6},
        {'name': 'Comfort Suites', 'price': 120, 'quality': 8},
        {'name': 'Luxury Stay', 'price': 200, 'quality': 9}
    ]

    # Create an agent
    agent = HotelRecommendationAgent()

    # Step 1: The agent recommends a hotel using the "cheapest" strategy
    recommended_hotel = agent.recommend_hotel(hotels, 'cheapest')
    print(f"Recommended hotel (cheapest): {recommended_hotel['name']}")

    # Step 2: The agent reflects on the choice and adjusts strategy if necessary
    reflection_result = agent.reflect_on_choice()
    print(reflection_result)

    # Step 3: The agent recommends again, this time using the adjusted strategy
    adjusted_recommendation = agent.recommend_hotel(hotels, 'highest_quality')
    print(f"Adjusted hotel recommendation (highest_quality): {adjusted_recommendation['name']}")

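Agent's Metacognitive Abilities

The key point here is the agent's ability to:

- Evaluate its previous choices and decision-making process.
- Adjust its strategy based on that reflection, that is, metacognition in action.

This is a simple form of metacognition in which the system is able to adjust its reasoning process based on internal feedback.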
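The evaluate-then-adjust cycle can also be written as a loop that keeps a trace of what was tried and why it was rejected. The sketch below is one possible variation, not the lesson's implementation: `choose`, `simulated_feedback`, and `recommend_with_reflection` are hypothetical names, and the feedback rule simply mirrors the simulated rule used in the hotel example (price under 100 or quality under 7 counts as "bad").

```python
def choose(hotels, strategy):
    # Pick a hotel according to the named strategy.
    if strategy == "cheapest":
        return min(hotels, key=lambda h: h["price"])
    return max(hotels, key=lambda h: h["quality"])


def simulated_feedback(hotel):
    # Simulated user feedback: too cheap or low quality counts as a bad choice.
    return "bad" if hotel["price"] < 100 or hotel["quality"] < 7 else "good"


def recommend_with_reflection(hotels, strategies):
    # Try each strategy in turn, recording the outcome so the agent can explain itself.
    trace = []
    for strategy in strategies:
        hotel = choose(hotels, strategy)
        feedback = simulated_feedback(hotel)
        trace.append((strategy, hotel["name"], feedback))
        if feedback == "good":
            return hotel, trace
    return None, trace


hotels = [
    {"name": "Budget Inn", "price": 80, "quality": 6},
    {"name": "Comfort Suites", "price": 120, "quality": 8},
    {"name": "Luxury Stay", "price": 200, "quality": 9},
]
best, trace = recommend_with_reflection(hotels, ["cheapest", "highest_quality"])
print(best["name"])
for step in trace:
    print(step)
```

The trace doubles as an explanation of the agent's reasoning, which supports the transparency goal discussed at the start of the lesson.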

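Conclusion

Metacognition is a powerful tool that can significantly enhance the capabilities of AI agents. By incorporating metacognitive processes, you can design agents that are more intelligent, adaptable, and efficient. Use the additional resources to explore metacognition in AI agents further.

Got More Questions about the Metacognition Design Pattern?

Join the Azure AI Foundry Discord to meet other learners, attend office hours, and get your AI Agents questions answered.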